Private 5G networks are revolutionizing remote broadcasting in media and entertainment through high-speed, low-latency connectivity. This white paper examines how this technology enables real-time HD video transmission and streamlined remote production while reducing the costs and complexity of traditional cabled broadcasting.

Immersive 5G broadcast content acquisition networks for sporting, entertainment, and other types of events are a game changer. They offer a completely new experience for participants and for live and remote spectators. Viewers can experience the action from the perspective of the athletes or entertainers, in real time or on demand, heightening the sense of almost being there. This white paper serves two key audiences: broadcast professionals seeking to enhance their contribution networks for immersive live content, and marketing strategists exploring new ways to engage remote viewers. Technical leaders will find detailed network architecture guidelines for implementing immersive broadcasting solutions, supported by real-world case studies.

In past events, the experience of remote spectators differed significantly from that of live attendees. Live attendees enjoyed the buzz of the stadium, with a full view of all event activities. In athletics or gymnastics competitions, live attendees could decide which of the parallel events to watch, and switch between competitions at will. For single-sport events like rugby or volleyball, spectators could observe off-court action or soak up the atmosphere from the stands. The experience for remote viewers, however, was far more limited. Content was typically captured by a small number of fixed TV cameras deployed throughout an event location. These cameras had to be cabled to provide power and connectivity, and were also limited by physical or regulatory restrictions in stadiums. The content captured by each of these cameras was by definition limited to one perspective. Furthermore, the production team decided which stream to send to the various broadcasting studios, which often concentrated on the streams most relevant for their particular market (e.g. preferring to broadcast those events where a nation’s athletes competed). More recently, cameras suspended on cables above the field of play in stadiums have provided a more dynamic, aerial camera angle. In addition to live broadcast video, photojournalists also captured the action of the competitions in still images. They were assigned to specific areas on the field of play, and had to go to special desks to connect to the internet to upload the possibly thousands of pictures captured. This reliance on wired high-bandwidth connections delayed the availability of such photographs to the news corporations which purchase the publication rights from the individual journalists. The journalists also compete with each other in the sale of their shots, so the wired upload process also affected their bottom line.

Organizers of modern sports events are starting to have a different vision: what if remote spectators, and even live attendees, could be immersed in the events as if they were the athletes? What if, instead of a small number of fixed TV cameras, hundreds of smartphones or pen cameras captured the competitions from the athlete’s perspective, moving to where the action is? What if live spectators could follow events on their smartphones? And what if photojournalists could make their shots available to news corporations in near-real time, immediately uploading their content from the stadiums rather than waiting until a wired network connection was within reach? This kind of immersive experience requires a radically new solution architecture, built around a Private 5G network.

Current Broadcast Architectures and Challenges

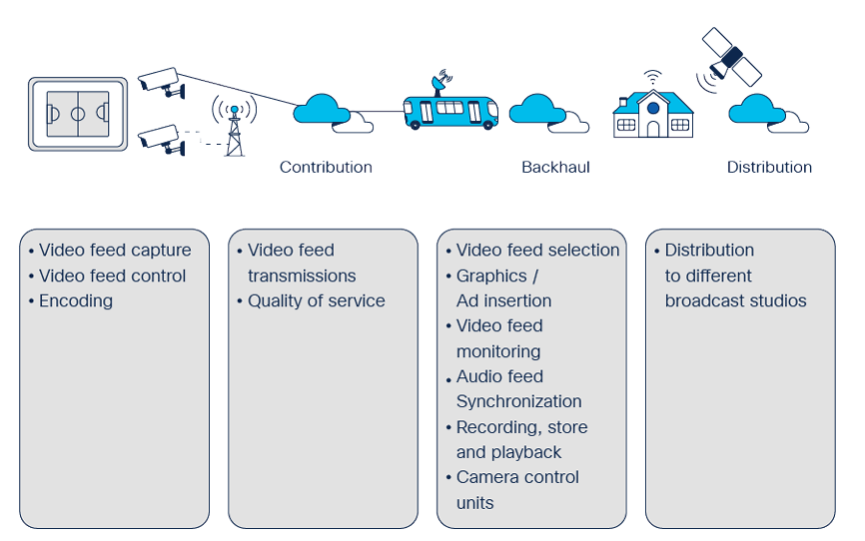

Immersive broadcast networks have significantly different requirements than existing broadcast networks. In a typical broadcast network today, a small number of high-quality TV cameras are installed in key locations in sports venues. These cameras capture the media streams and encode the video feeds for transmission. They are often still connected to a content acquisition (aka contribution) network via a wired access link, which remains common because it provides the required guaranteed bandwidth and low latency for the captured media streams. More recently, cameras equipped with cellular interfaces have also become available. The implementation of such wireless transmission is typically based on Coded Orthogonal Frequency Division Multiplexing (COFDM) technology in custom frequency bands. In many cases, multiple cellular interfaces can be bonded together to provide the required transmission quality and ensure that the different media types (voice, video, control) can be successfully transmitted. The contribution network, typically based on IP / Ethernet or multiple cellular networks, aggregates the media streams from different cameras and forwards the feeds to the production suite. In many venues, the production suite is hosted inside an Outside Broadcast (OB) VAN for post-processing, where the production team performs actions such as:

- Decoding of the video feeds

- Insertion of graphics elements

- Storage of video feeds

- Synchronization with audio streams (e.g. from commentators)

- Selection of feeds for broadcasting

- Controlling camera settings

In some cases, video feeds may also be forwarded to a physical production studio via a satellite or wired network (backhaul). The processed media is then offered to various digital rights holders or partners. These could be, for example, broadcasters in specific countries which subscribe to the content. The purchased live content is then disseminated to the viewers of such broadcasters using their local distribution network.

Figure 1 illustrates the high-level architecture of current video broadcast networks, and further details can be found in [3], [4], and [5].

Figure 1 – High-level Broadcast Architecture

The second type of media produced at such live events is still photographs taken by photojournalists. Photojournalists are typically restricted to specific ‘fenced-off’ locations within the stadiums or venues so as not to disrupt the events. Analogous to the live video feeds, any photos taken by journalists are offered to news agencies such as Reuters, Associated Press, AFP, Deutsche Presse-Agentur etc. Journalists are independent agents competing against each other, so the timely upload of their photos is critical. In many cases, uploads require a wired connection due to the sheer number and size of photographs. Here too, cellular networks are increasingly being used by journalists to provide content faster.

The broadcast architecture described above suffers from three main challenges.

- Wiring

While wiring TV cameras provides the necessary bandwidth to capture video feeds, it also limits the area of operations of camera operators, and hence the perspectives of the event that they can capture. Furthermore, wiring TV cameras often comes with operational complexities. The wires have to be installed, which is typically a time-consuming process for temporary events. The installation requires a proper site survey to determine where the cables can be placed. Safety guidelines of the stadiums have to be adhered to, such as installing proper wiring ducts. Also, the deployed wires must not impact stadium operations, for example by obstructing spectator, athlete, or performer foot traffic.

- Bandwidth guarantees of public cellular and Wi-Fi wireless networks

Wiring challenges could be remediated by using cellular or Wi-Fi connectivity. Many modern TV cameras already provide multiple 4G/5G cellular interfaces. Photo cameras can be connected or tethered to a mobile gateway with a Wi-Fi or cellular uplink. However, public 4G/5G cellular connections or Wi-Fi prove impractical at large sports events or concerts. The sheer number of spectators, often reaching tens of thousands, can saturate any Wi-Fi or public 4G/5G cellular network. Furthermore, current public 4G/5G networks are optimized for downloads. The typical traffic pattern for consumer services is to request content from the internet, and so cellular networks are configured to provide more bandwidth on the air interface towards cellular handsets. In addition, many entertainment environments host further wireless networks for event staff (e.g. walkie-talkies) which may cause interference. To be usable for TV broadcast streams, the air interface has to guarantee uplink bandwidth from the end devices (TV or photo cameras) and be free from interference.

- Technical Challenges of current Digital DVB-T cameras

Current wireless digital TV cameras based on DVB-T face several challenges from an RF perspective, such as the available channel width, the choice of modulation scheme, and the management of video coding and delay. In such environments, a channel width of 20 MHz is traditionally provisioned for such cameras, which makes it challenging to accommodate the tens or hundreds of video endpoints envisaged for an immersive broadcasting network. DVB-T allows different modulation schemes to be used, e.g. QPSK, 16-QAM, or 64-QAM, but these offer different levels of capacity, which again may impact the number of video endpoints in the network. Given these bandwidth requirements, TV cameras based on the existing DVB-T technology are thus not suitable for scaling to a large number of endpoints.

Haivision’s Broadcast Transformation report [6] provides deeper insights into the key trends in the broadcast market. The report highlights for example that:

- 60% of video contribution networks are based on cellular technologies.

- The majority of broadcasters (73%) rely on hardware encoding and codecs. The use of HEVC encoding is on the rise.

- Secure Reliable Transport (SRT) is the preferred transport protocol for video content. 5G is already used or planned to be used by 74% of broadcasters.

- 84% of deployments rely on cloud-based technologies, for example to support stream routing, remote collaboration, media asset management, live production master control, or video editing.

- Artificial intelligence will make inroads into the workflows – being flagged in the report as the top impact on broadcast production in the next 5 years.

Additional insights into current broadcast architectures for sporting events can be found in [7].

Requirements for immersive broadcast networks

Immersive broadcast solutions offer enhancements over such traditional broadcast networks, especially by accommodating a much larger number of camera sources that may even be mobile. Imagine professional TV cameras being connected over a reliable and secure cellular network that is able to offer bandwidth and latency guarantees. Or video footage being captured with pen cameras or smartphones by the athletes or performers themselves. Such immersive video capture places additional requirements on the network, particularly on the content acquisition network:

- Cheaper video capture devices

The current professional TV cameras – digital or analog – are very expensive and not suitable to be deployed in large numbers. To realize the goal of immersive video capture from the athlete’s or performer’s perspective, the number of cameras has to increase significantly. Consequently, these devices need to become significantly cheaper to ensure a positive business case.

- Uplink-centric wireless transport

An immersive broadcast network architecture has to be able to offer sufficient capacity for uplink-centric transmission of high-definition video flows that now may come from hundreds of sources, ranging from professional TV cameras to the latest handheld smartphones or photo cameras. TV cameras typically generate between 40 and 60 Mbps per flow. Handheld smartphones or pen cameras require between 6 and 8 Mbps on average. A typical journalist generates thousands of photos during an event, each between X and Y in size.

- Bandwidth Guarantees

The network must be able to guarantee bandwidth for the various types of cameras. While codecs are able to mitigate some bandwidth fluctuations by supporting variable bit-rate encoding and playback buffers, a minimum bandwidth level per device has to be maintained for the stream to be usable by the TV producers. This requirement typically implies the deployment of QoS mechanisms in the network such as minimum bandwidth guarantees.

- Low latency

For video feeds from professional TV cameras, pen cameras or handheld smartphones to be viable for broadcast transmission, they have to arrive at the production studio with minimal latency and jitter. Producers expect an end-to-end, glass-to-glass latency of around 100 ms (from the camera to the production / mixing function) for the feed to be usable.

- Interference-free transmission

The possibly hundreds of video cameras are highly mobile in an immersive broadcast video architecture. Cameras are no longer tied to a restricted area of operation. They may appear anywhere in the venue. Consequently, the cameras must be protected from interference wherever they move. The wireless communication used for the broadcast network cannot be impeded by competing wireless communications, such as Wi-Fi hotspots operated by spectators, Wi-Fi networks for venue operations (e.g. vending), walkie-talkies, or similar sources.

- Nomadic solutions

Many events are temporary in nature, often only occurring on an annual basis or at best a few weeks in a year. Contribution networks are therefore typically not installed as permanent fixtures in a venue for cost reasons. This calls for immersive broadcast networks to support nomadic operations, which can easily be stood up and torn down for the event duration. This applies to all of the components in the immersive broadcast architecture – from access network to connect cameras, to the transport network towards the OB VAN or production studios.

- Comprehensive Management and Observability

As a corollary to the video contribution network having to be nomadic, manageability and observability also have to cover all solution components. Operators have to be able to understand the state of the network in real time to meet the reliability requirements of real-time video feeds. In case of failures of local components, the local support staff has to be able to react quickly to restore the service. The management and observability stack of the solution should support both cloud-based and local operations.

- Reliability

Live events are highly critical, often reaching millions of spectators. The contribution networks thus cannot tolerate any component outages that may lead to a loss of the video feeds. While the loss of individual video feeds can be tolerated for short periods of time (producers in an immersive broadcast have many more feeds to choose from), transport components in the network such as radios, switches, or cellular core functions must be deployed with resilience to outages.

- Mobility

To immerse spectators in live events, the number of video sources is increasing by at least an order of magnitude. Support for smartphones and pen cameras also implies that these are more mobile throughout the event locations. The network thus needs to accommodate such mobility by seamlessly allowing a camera device to connect to different radios when moving around the site.

- Segmentation

Live content is likely going to be captured by different organizational actors. For example, the rights to the content captured by photojournalists, TV camera crews, or athletes / performers themselves belong to different organizations. The immersive broadcast network needs to honor these organizational requirements by supporting end-to-end segmentation of content with capabilities such as separate VLANs, VRFs, DNNs, or even separate spectrum bands.

- Deployment flexibility

Sports and entertainment events are sometimes organized in non-standard locations. Events such as the Tour de France, FIFA championships, sailing competitions, or Olympic events change locations frequently. Sometimes, these events even happen in historically protected locations that need to be preserved. Immersive broadcast solutions have to be flexible enough to accommodate local regulatory requirements and adapt to the local environments.
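The uplink-centric transport requirement listed above can be made concrete with a little arithmetic. The following Python sketch estimates the aggregate uplink demand from the per-device rates quoted in this paper (40 to 60 Mbps per TV camera, 6 to 8 Mbps per smartphone or pen camera). The device counts, class names, and function name are illustrative assumptions, not figures from a deployment. Still-photo uploads are left out because their size is not specified here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceClass:
    """A group of identical video sources sharing the uplink."""
    name: str
    count: int        # hypothetical number of devices of this type
    min_mbps: float   # lower bound of the per-device bit rate
    max_mbps: float   # upper bound of the per-device bit rate


def aggregate_uplink_mbps(classes):
    """Return (low, high) total uplink demand in Mbps across all classes."""
    low = sum(c.count * c.min_mbps for c in classes)
    high = sum(c.count * c.max_mbps for c in classes)
    return low, high


# Per-device rates come from the text; the counts are assumptions.
example_venue = [
    DeviceClass("TV camera", count=10, min_mbps=40, max_mbps=60),
    DeviceClass("smartphone / pen camera", count=100, min_mbps=6, max_mbps=8),
]

low, high = aggregate_uplink_mbps(example_venue)
print(f"Aggregate uplink demand: {low:.0f} to {high:.0f} Mbps")
```

With these assumed counts the demand comes to 1000 to 1400 Mbps, which then has to be shared across however many radios and carriers the design provides.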

Cisco’s Immersive Private 5G Broadcast Architecture

To address the above requirements for immersive broadcast networks, Cisco established an architecture based on Private 5G. The architecture leverages a private 5G cellular transport network to aggregate high-end, high-definition video flows from TV cameras, pen cameras, or even smartphones, as well as 5G gateways for the high-bandwidth upload of photo camera content towards the broadcast centers in the OB VAN or production studios. The architecture is made up of the following components:

Video endpoints

Video endpoints are responsible for the capture of the live events. These can be high-end TV cameras from vendors such as Panasonic or Sony equipped with a codec (e.g. Haivision’s Pro460). Highly mobile and immersive footage can be captured by pen cameras or even smartphones (Apple iPhone, Samsung Galaxy), equipped with appropriate codec software (e.g. Haivision MoJoPro). Footage from photo journalists can be captured and collected using a cellular gateway, ideally also leveraging current smartphones in a tethering mode.

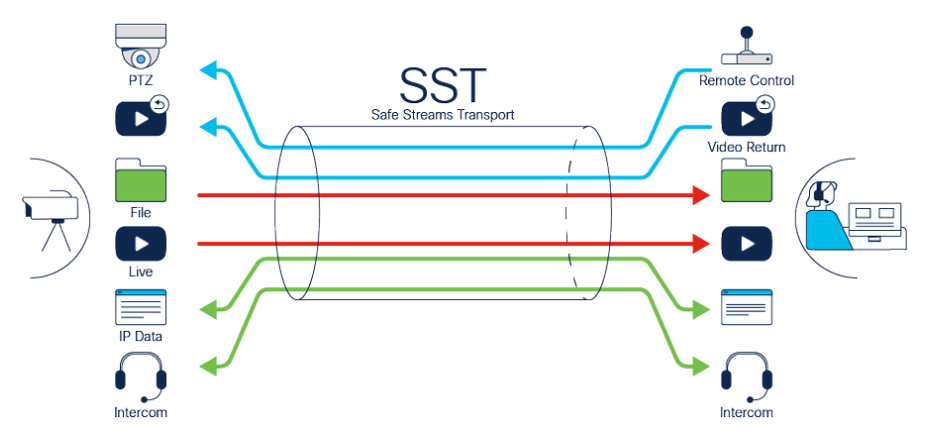

The architecture demarcation point is the codec installed in the cameras. The codecs are responsible for encoding the video and audio feeds for transmission over IP towards the production site. A control channel from the production suite allows for remote control of the video capture parameters, such as adjustment of the encoding type (CBR, VBR) or rate. For example, Haivision developed the Safe Stream Transport (SST) protocol to multiplex audio, video and control channels in an IP tunnel for reliable transmission and control. This technology supports for example:

- Adaptive forward error correction.

- Packet re-ordering.

- Automatic Repeat Request (ARQ).

- Regeneration of missing packets.

- Adaptive bit rate encoding.

- Aggregation of multiple traffic flows (voice, video, control) in a large transmission pipe.

This tunnel terminates in the corresponding decoding function (Haivision Streamhub) in the production suite (as illustrated in Figure 2 below).

Figure 2 – Private 5G content acquisition network (Radio Access Network)
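To illustrate two of the transport features listed above, packet re-ordering and the detection of gaps that an ARQ mechanism could request again, the following Python sketch shows a generic receive-side reorder buffer. It is a textbook illustration only and does not represent Haivision's SST implementation. The class and method names are hypothetical, and sequence-number wraparound and timeouts are deliberately ignored.

```python
class ReorderBuffer:
    """Releases packets in sequence order, holding back early arrivals."""

    def __init__(self, first_seq=0):
        self.next_seq = first_seq   # next sequence number owed to the decoder
        self.pending = {}           # out-of-order packets keyed by sequence number

    def push(self, seq, payload):
        """Accept one packet; return the payloads now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []               # duplicate or already delivered: drop it
        self.pending[seq] = payload
        ready = []
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready

    def missing(self):
        """Sequence numbers a receiver could ask the sender to repeat (ARQ)."""
        if not self.pending:
            return []
        highest = max(self.pending)
        return [s for s in range(self.next_seq, highest) if s not in self.pending]


# Packets 2 and 3 overtake packet 1 on the way to the decoder.
buf = ReorderBuffer()
delivered = []
for seq, data in [(0, "a"), (2, "c"), (3, "d"), (1, "b")]:
    delivered.extend(buf.push(seq, data))
print(delivered)
```

The decoder side sees the payloads in their original order even though they arrived shuffled. A production protocol would additionally bound how long it waits for a gap to be filled before giving up, since a live feed cannot stall indefinitely.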

Connectivity for the mobile endpoints is provided by a private 5G network. Such networks offer dedicated private spectrum to the operators, which is difficult to interfere with. Such spectrum has to be acquired from the regulator in the country of operation, ideally in the midband spectrum (3.5 GHz band).

Private 5G radios, for example from Neutral Wireless, are configured to send and receive in the acquired spectrum band and are deployed in the event locations to provide the air interface towards the video / 5G gateway endpoints. For a large location such as a stadium, only a few radios are required. Private 5G networks are able to operate at higher power levels and can thus cover a larger area compared to current Wi-Fi access points. Midband spectrum also allows for higher throughputs to be achieved over the cellular connection compared to public spectrum bands below 1 GHz.

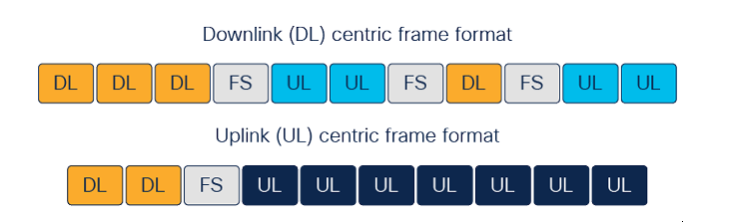

The radios are configured for uplink-centric traffic patterns to ensure that the bandwidth requirements for the video feeds are met. As illustrated in Figure 3, such a frame format allocates more time on the air interface to uplink traffic in contiguous timeslots. The air interface slots can also be used more efficiently by recuperating two of the Frame Separator (FS) slots. Note that radios typically offer multiple downlink-centric frame formats.

Figure 3 – Example of Downlink and Uplink centric Frame Formats
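The effect of choosing an uplink-centric frame format can be sketched by counting slots. The Python example below computes the share of slots per direction in a repeating slot pattern. The pattern strings are hypothetical and are not the formats shown in Figure 3, and separator slots are counted as carrying no user data, which is a simplification.

```python
def slot_shares(pattern):
    """Return the fraction of slots per direction in a repeating TDD pattern.

    'D' = downlink slot, 'U' = uplink slot, 'S' = separator / guard slot
    (assumed here to carry no user data).
    """
    pattern = pattern.upper()
    unknown = set(pattern) - set("DUS")
    if not pattern or unknown:
        raise ValueError(f"unsupported slot pattern: {pattern!r}")
    total = len(pattern)
    return {kind: pattern.count(kind) / total for kind in "DUS"}


# Hypothetical patterns for illustration only; real frame formats depend on
# the radio vendor and on the regulator's rules for the band.
downlink_centric = slot_shares("DDDSU")
uplink_centric = slot_shares("DSUUU")

print(f"downlink-centric pattern, uplink share: {downlink_centric['U']:.0%}")
print(f"uplink-centric pattern, uplink share:   {uplink_centric['U']:.0%}")
```

Under these assumed patterns, the uplink share of air time rises from 20% to 60%, which is the kind of shift a contribution network needs when nearly all traffic flows from cameras towards the production suite.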

As is typical for a 3GPP-based architecture, the radios consist of the actual antennas (remote radio heads, RRHs) emitting the radio waves, as well as a gNB baseband unit (BBU) running the associated communications protocol stack. The BBUs perform L1 signal and MAC processing, execute the Radio Link Control (RLC) protocol, and interface to the Private 5G packet core. The RRHs, also referred to as Remote Radio Units (RRUs), are responsible for RF transmission and reception, amplification of the signal, RF filtering, and beamforming / Multiple-Input Multiple-Output (MIMO). Note that multiple radio antennas can be connected to the same BBU, which already provides a level of content aggregation. BBUs typically come with 10 Gbps Ethernet uplinks, allowing them to carry multiple compressed video feeds. The BBU and RRHs are connected via a CPRI interface (not based on IP). In the case of Neutral Wireless, the RAN is based on a software-defined radio stack, where the BBU runs on a standard Intel-based x86 compute platform.

Content acquisition backhaul network

The BBU devices are connected to a site-local campus aggregation infrastructure to reach the private 5G core network functions and ultimately the production suite in the OB VAN / production site. Standard Cisco Catalyst Ethernet switches or Cisco NCS-series switches are part of the architecture for this purpose. Depending on the RAN equipment, this Ethernet network may need to distribute timing information to keep the radios synchronized. Cisco supports the Precision Time Protocol (PTP) in such cases. A grandmaster clock signal can be fed into such a network, to be subsequently distributed throughout the infrastructure to reach the BBU equipment.

In an immersive broadcast network, ensuring low latency for video streams is critical. The Ethernet-based content acquisition backhaul network may thus be configured with different quality-of-service mechanisms, especially when traffic from the 5G cameras is mixed with non-critical traffic in the network.
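One common building block for such quality-of-service mechanisms is DSCP marking, which lets switches classify and prioritize traffic. The Python sketch below converts DSCP code points into the IP TOS byte and shows how a sender could request marking on a socket. The DSCP values are the standard code points, but the mapping of traffic types to classes is an assumption made for this example and is not taken from the Cisco design.

```python
import socket

# Standard DSCP code points (RFC 2474, RFC 2597, RFC 3246). Which class each
# traffic type is mapped to is an assumption made for this example.
DSCP_BY_TRAFFIC = {
    "intercom_audio": 46,   # EF (expedited forwarding)
    "camera_video": 34,     # AF41
    "camera_control": 26,   # AF31
    "bulk_upload": 0,       # best effort (default)
}


def tos_byte(dscp):
    """Convert a 6-bit DSCP value into the 8-bit IP TOS / Traffic Class byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2   # DSCP occupies the upper six bits; ECN bits stay 0


def mark_socket(sock, traffic_type):
    """Ask the OS to mark outgoing IPv4 packets of this socket.

    Whether the marking is honored depends on the platform and on the
    trust settings of the switches along the path.
    """
    tos = tos_byte(DSCP_BY_TRAFFIC[traffic_type])
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos)


for name, dscp in DSCP_BY_TRAFFIC.items():
    print(f"{name}: DSCP {dscp} -> TOS byte 0x{tos_byte(dscp):02x}")
```

Marking by itself guarantees nothing. The switches in the backhaul still have to be configured to trust the markings and to map them to appropriate queues.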

In some environments, the private 5G core may be beyond the reach of a physical fibre connection. In such cases, Cisco’s Ultra-reliable Wireless Backhaul (CURWB) can be deployed to carry the aggregated RAN traffic. CURWB deploys back-to-back antennas configured in the unlicensed spectrum to deliver point-to-point, point-to-multipoint, or mesh connections over multiple miles / kilometers. The optional CURWB contribution backhaul network features highly reliable links based on MPLS with Forward Error Correction (FEC), and is thus well suited to meet the requirements of immersive broadcast networks.

Private 5G core

The private 5G core provides the required network functions to manage the cellular 5G connections initiated by the 5G interfaces in the endpoint codecs. In particular, the core provides the data plane for the 5G sessions (PDU session establishment and termination, GTP tunnel encapsulation /decapsulation), as well as associated control capabilities that govern the session characteristics (e.g. QoS settings, PDU session policies, IP Address assignment).
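The GTP tunnel encapsulation mentioned above can be sketched in a few lines. The Python example below builds and parses the mandatory 8-byte GTP-U header for a user packet (G-PDU). It is a minimal sketch that omits the optional fields and extension headers that a 5G deployment typically adds (for example to carry the QoS flow identifier), as well as the outer UDP/IP headers (GTP-U runs over UDP port 2152). The tunnel identifier and payload used here are made-up values.

```python
import struct

GTPU_FLAGS = 0x30       # version 1, protocol type GTP, no optional fields
GTPU_MSG_GPDU = 0xFF    # G-PDU: the message carries a user packet
GTPU_HEADER = struct.Struct("!BBHI")   # flags, message type, length, TEID


def gtpu_encapsulate(teid, inner_packet):
    """Prefix a user packet with the mandatory 8-byte GTP-U header."""
    # The length field counts the bytes that follow the mandatory header.
    header = GTPU_HEADER.pack(GTPU_FLAGS, GTPU_MSG_GPDU, len(inner_packet), teid)
    return header + inner_packet


def gtpu_decapsulate(frame):
    """Return (teid, inner_packet) from a minimal G-PDU without optional fields."""
    flags, msg_type, length, teid = GTPU_HEADER.unpack_from(frame)
    if flags != GTPU_FLAGS or msg_type != GTPU_MSG_GPDU:
        raise ValueError("not a plain G-PDU")
    start = GTPU_HEADER.size
    return teid, frame[start:start + length]


# Hypothetical tunnel endpoint identifier and payload.
frame = gtpu_encapsulate(0x1234, b"video")
print(frame.hex())
print(gtpu_decapsulate(frame))
```

The tunnel endpoint identifier (TEID) is what lets the UPF associate each encapsulated packet with the PDU session it belongs to.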

The Private 5G core is delivered by Cisco in the Immersive Broadcast architecture. To meet the high-availability requirements of such networks, a cluster of three Cisco UCS servers is deployed to run redundant instances of the 5G core network functions: the UPF for the data plane, the AMF for mobility and access management, the SMF for session management, as well as several auxiliary functions to assist with policy and security (endpoint / user AAA, management plane encryption etc.). In support of the immersive broadcast network, the core has to support the following functionality:

- High-availability in support of critical media acquisition for millions of spectators.

- Traffic Segmentation with VLANs, VRFs, and different DNNs.

- Small form factor deployment to be easily moved from event to event.

- Local authentication to guard against internet failures.

- Local policy assignments, especially in support of Guaranteed Bit Rate services.

- Local monitoring capabilities.

Production equipment

The Private 5G core functions are connected to the production equipment for processing of the video feeds, typically leveraging a Cisco Catalyst switch deployed in the OB VAN or production site. A key component is the decoder for the video flows. For example, Haivision offers the Streamhub solution to provide the counterpart to the codec function that is hosted in the endpoints. The above-mentioned SST tunnel, which multiplexes the video and audio feeds and the control channel into an application layer tunnel, is terminated inside the Streamhub. This enables the production crew to control the content acquisition remotely by setting the encoding type (CBR, VBR) or the encoding rate for all connected cameras. The solution also provides automatic repeat request (ARQ) functions to mitigate packet losses, and handles packet re-ordering events that may have occurred during the transmission over the private 5G network.

Management and Operations stack

The different solutions (video stream control, RAN, contribution access network, Private 5G core) all come with their respective management solutions. Some of these components are cloud managed, such as the Private 5G core. Cisco also provides a cloud-based end-to-end observability dashboard for the architecture to show the real-time status of the pertinent components, such as session status or Private 5G core status. To ensure that cloud-based management does not disrupt live event operations, access to the cloud has to be highly reliable. For this reason, multiple cloud-connectivity WAN connections can be deployed: (SD-)WAN, public cellular backhaul using LTE or 5G, as well as a satellite connection. The Cisco IR router series is integrated in the solution for this purpose. Note that the content distribution network to reach the viewers is currently assumed to exist.

Figure 4 – Cisco’s Immersive Broadcast Contribution Network Architecture

Benefits of Private 5G Networks for immersive broadcast networks

The Cisco immersive broadcast architecture is based on a private 5G network to connect the various types of cameras to the production facilities in the OB VAN or the media centers. Multiple use-cases (photo upload, smartphone cameras, professional TV cameras) can be realized over the same contribution access network infrastructure. Live sports and entertainment events can thus have multiple avenues for monetization. The air interface is based on licensed and dedicated spectrum. The Private 5G operators have to acquire spectrum bands from the national regulators, typically in the range of 20 MHz to sometimes multiple 100 MHz bands. While this comes with an additional cost and administrative overhead, it secures the air interface from other users, thus limiting interference from third-party sources.

Private 5G networks are based on 3GPP cellular technology that has been proven and has evolved in macro cellular networks for decades. Mobility for 5G endpoints (in this case TV cameras, smartphones, and photo cameras) is thus inherently built in. The underlying technology stack also supports the quality-of-service requirements mentioned above in terms of bandwidth guarantees or low-latency communications. Sophisticated policies to govern user traffic segmentation, authentication, or security are also inherently part of the stack.

A key advantage of private 5G as a contribution access transport technology is the wireless coverage it provides. In many event locations, only a few radios are required to light up the field of play. With proper radio planning based on site surveys, these networks can reach up to ¾ of a mile (approx. 1 km), owing to the higher transmit power budget of 5G radios among other factors. This makes the technology particularly suitable for large venues and outdoor events.

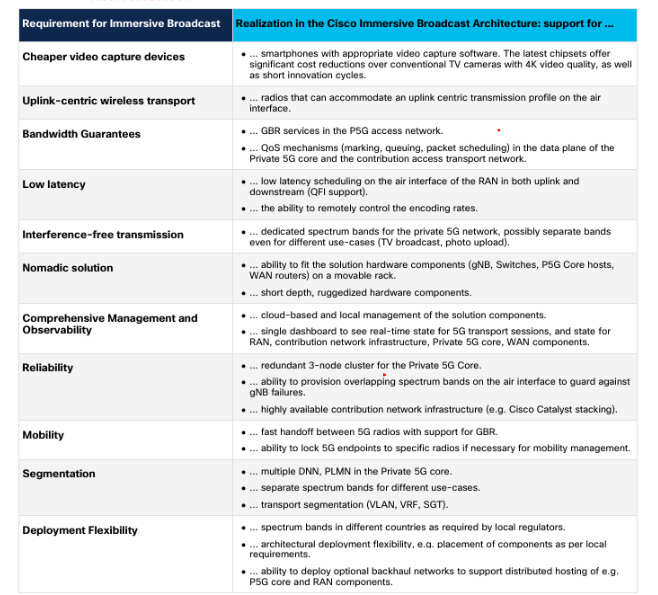

Table 1 provides a summary of how the requirements for immersive broadcast networks listed above are realized by the Cisco Solution:

Table 1 – Fulfillment of Requirements for Immersive Broadcasting

Use-case example: Major world-wide multi-sports event

The value of an immersive broadcast network based on Cisco’s Private 5G solution was demonstrated at a major sports event. This event ran for over two weeks across multiple venues and covered multiple sporting disciplines on water (sailing, windsurfing), in indoor and outdoor stadiums (aquatics, athletics, gymnastics), as well as in public venues. The event was watched live by over 1M spectators during the two weeks, and re-broadcast by national broadcasters world-wide.

Requirements

The organizers of this event wanted to showcase private 5G in a broadcast environment to show how live events could be transmitted wirelessly with low latency and guaranteed bandwidth. They also wanted to create multiple use-cases, for example by offering tiered near-real-time upload services for photojournalists. This use case was important to solidify the economic viability of deploying a private 5G network infrastructure for the event. The solution had to meet the following high-level requirements:

- Support for broadcast video content acquisition with professional 4K/HD TV cameras connected over a private 5G network with a glass-to-glass (encoder input to decoder output) latency of less than 90 ms.

  - The cameras offer integrated audio-video main feed, video return, camera-control, intercom, IEM, microphone.

- Support for content acquisition with standard commercial smartphones, such as the Samsung Galaxy series.

- Support for near-real-time still camera photo uploads.

- Support for a nomadic solution that can easily be moved around the venues and between sites, and can be re-used for future events.

- Adherence to the overall project budget.

- Support for three main event location types:

  - Public venues, where immersive video+audio is to be captured using 250+ regular smartphones with a guaranteed bit rate. The smartphones are assumed to be mobile and travelling along a pre-defined trajectory.

  - Stadium venues, where 4K/HD broadcast cameras capture high quality, low-latency video streams with constant bit rates from the field of play.

  - Maritime venues, where immersive video and audio streams are captured with 20+ pen cameras and regular smartphones mounted on sailboats.

  - In all venues, the still photographer use-case for near-real-time uploads has to be supported for up to 50 journalists.

- Support for high-availability: the public nature of the event implies that there is zero tolerance for any failure.

Solution Architecture

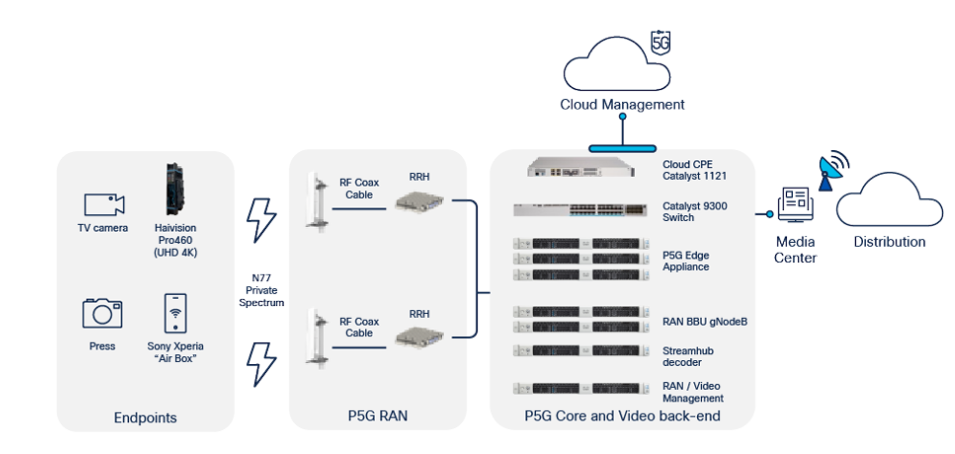

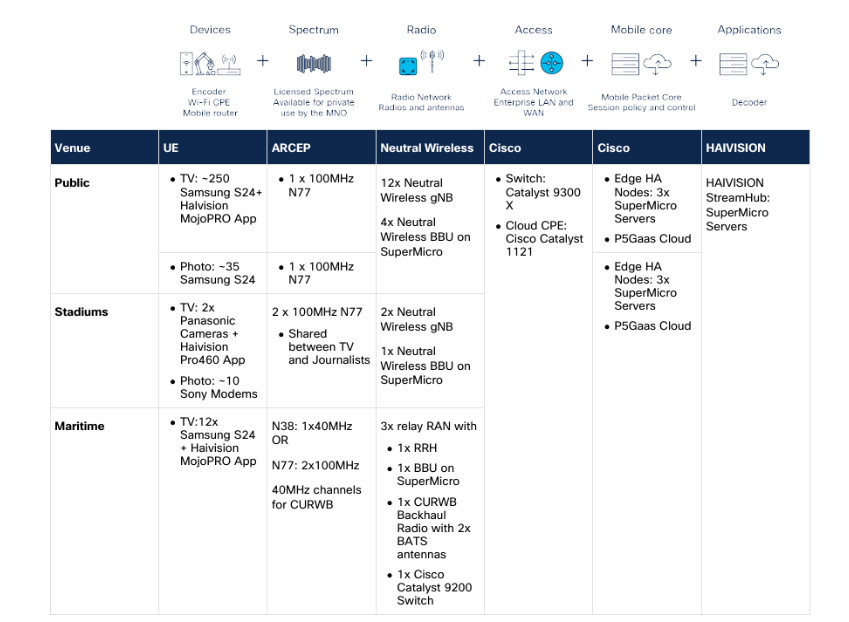

To meet these requirements, a consortium of partners including Haivision, Neutral Wireless, Intel, Orange, and Cisco was formed. Haivision provided the video capture, application layer transmission (encoding /decoding) and processing capabilities. The RAN components were supplied by Neutral Wireless, who brought extensive experience in broadcast networking over cellular to the team. All hosting hardware was Intel-powered. Cisco contributed the Private 5G Core functions, and together with Orange was responsible for the systems integration.

The immersive contribution network architecture was based on the design described in Figure 4. Specifically, a private 5G core was installed in each venue, along with the uplink connectivity to the broadcast distribution network and to the internet for cloud management. However, the RAN architecture varied slightly for each venue type to accommodate the specific requirements in terms of bandwidth, number of endpoints, and radio conditions. Table 2 summarizes the architectural specifics for each venue type.

Table 2 – Immersive Broadcast Solution Components and Vendors

Public events

For public outdoor locations, live video of the events was captured on barges that travelled down a river. Samsung S24 smartphones were either fixed on the barges or held and operated by athletes. In addition, journalists travelled along the river on smaller boats to capture still photos of the action. The smartphones ran the Haivision MoJoPro app to acquire the video, encode the stream, and provide video control to the producer in the centralized production studio. The smartphones were connected to the Private 5G Radio Access Network from Neutral Wireless. A total of 12 antennas were installed on bridges, pointing upstream and downstream to provide coverage over the river for the devices to connect. To separate the video feeds on the barges from the journalist boats, two separate spectrum bands, each 100 MHz wide, were configured. This guaranteed the bandwidth for both use-cases and ensured that photo uploads from journalists never impacted the live video feeds from the athletes’ or mounted phones.

The antennas with their corresponding Remote Radio Heads (RRH) were connected via fibre to four base-band units (BBUs), which in turn were backhauled over a switched Catalyst 9300 transport infrastructure to the Private 5G core and the production suite. Both the P5G core and the Haivision stream control appliances were centrally hosted in a production studio.

The number of radios installed on the bridges had to be limited to meet the budget requirements of the operator. This implied that the coverage along the river was not contiguous. As barges and boats floated downstream, the video feeds were periodically lost due to a lack of radio coverage. The design of the mobility handoff for the video sessions between private 5G radios was thus particularly important. The RAN configuration was optimized such that the Samsung S24 smartphones reconnected to a downstream radio after passing through a dark zone with minimal disruption.
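
The effect of a limited radio count on coverage can be illustrated with a minimal sketch that treats the river as a one-dimensional course and lists the stretches that no radio reaches. The function name, radio positions, and cell range are hypothetical and were not taken from the deployment; real radio planning would rely on measured propagation data.

```python
from typing import List, Tuple

def dark_zones(radio_positions_m: List[float], cell_range_m: float,
               course_length_m: float) -> List[Tuple[float, float]]:
    """Return the stretches of the course that are not covered by any radio."""
    # Each radio is assumed to cover [position - range, position + range],
    # clipped to the start and end of the course.
    intervals = sorted(
        (max(0.0, p - cell_range_m), min(course_length_m, p + cell_range_m))
        for p in radio_positions_m
    )
    gaps = []
    covered_until = 0.0
    for start, end in intervals:
        if start > covered_until:
            gaps.append((covered_until, start))  # uncovered stretch before this radio
        covered_until = max(covered_until, end)
    if covered_until < course_length_m:
        gaps.append((covered_until, course_length_m))
    return gaps

# Hypothetical example: three bridge sites along a 4 km course, 400 m range each.
print(dark_zones([500, 2000, 3500], 400, 4000))
```

Each returned tuple marks a stretch in which a device would lose its session and have to reconnect to the next downstream radio.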

Two separate Cisco Private 5G Cores were used to continue the channel separation of video traffic from journalist upload traffic. Both cores had separate DNNs configured, and fed into the same Production toolstack for post-processing by producers. The DNNs were identified by a specific 5QI value, encoding the permissible Aggregate Maximum Bit Rate (AMBR) values to the Samsung devices in both the uplink and downlink direction. These settings ensured that any specific mobile device was always limited to a maximum bandwidth on the air interface by the radio scheduler, and therefore that no device could starve out others.
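
The idea of capping each device at a maximum bit rate can be sketched with a token bucket, a common generic rate-limiting technique. This is an illustration only and does not represent the radio scheduler used in the deployment; the class name, rate, and burst size are assumptions.

```python
class AmbrLimiter:
    """Token bucket that caps one device at a configured bit rate (illustrative)."""

    def __init__(self, ambr_bps: float, burst_bits: float):
        self.ambr_bps = ambr_bps        # assumed per-device cap in bits per second
        self.burst_bits = burst_bits    # largest burst the bucket can absorb
        self.tokens = burst_bits        # bucket starts full
        self.last_time_s = 0.0

    def allow(self, packet_bits: int, now_s: float) -> bool:
        """Return True if the packet fits within the cap at time now_s."""
        # Refill tokens for the elapsed time, never beyond the burst size.
        elapsed = now_s - self.last_time_s
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.ambr_bps)
        self.last_time_s = now_s
        if packet_bits <= self.tokens:
            self.tokens -= packet_bits
            return True
        return False

# Hypothetical cap of 10 Mbps with a burst of one 1500-byte packet.
limiter = AmbrLimiter(ambr_bps=10e6, burst_bits=12000)
print(limiter.allow(12000, now_s=0.0))    # first packet consumes the full bucket
print(limiter.allow(12000, now_s=0.0))    # second packet at the same instant is refused
print(limiter.allow(12000, now_s=0.002))  # bucket has refilled after 2 ms
```

Because every device has its own bucket, a device that attempts to send more than its cap is throttled without affecting the budget of the others.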

The production team in the centrally located studio facilities received the live video feeds from more than 200 Samsung S24 devices, as well as from fixed cameras not connected over the Private 5G access network. FTP servers were hosted in the production suite to receive the journalists’ still-camera uploads. A redundant pair of Haivision StreamHub decoder applications was hosted on Supermicro servers. The production suite was also connected to the Internet and the broadcast distribution network over redundant WAN connections (based on Cisco Catalyst 1121 Series Routers).

Figure 5 illustrates the use-case for public venues at a high level.

Figure 5 – High-level Architecture for public Venues

A total of over 400 GB of video traffic was generated by the Samsung smartphones, in addition to the over 25 GB of still photographs uploaded by journalists.

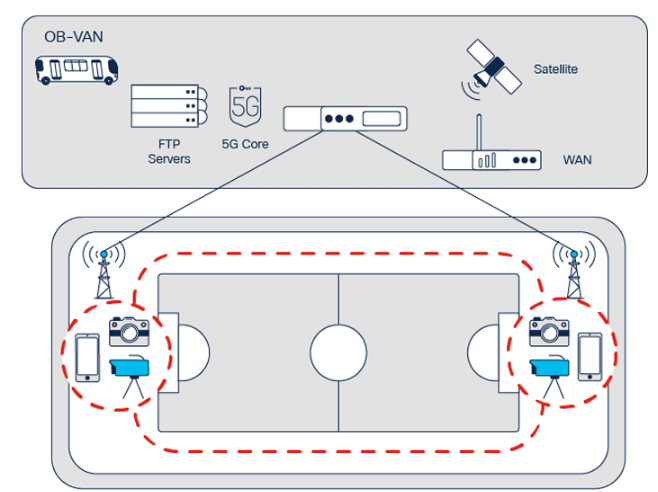

Stadium competitions

The immersive broadcast architecture in stadium venues also had to accommodate the capture of live events as well as still photograph uploads by journalists. The video footage in this case was captured by 4K / HDTV cameras enhanced by a Haivision Pro460 stream controller. This module provides hardware encoding, which proved critical to meeting the producers’ stringent 90 ms glass-to-glass latency requirement. In each stadium venue, two such cameras were deployed on opposing ends of the field of play to capture athletics, swimming, or gymnastics competitions. These cameras generated video feed traffic on the order of 60-80 Mbps.

In this case two Neutral Wireless radios (antennas and RRH) were mounted in the grandstands to cover the field of play. Unlike in the public venue locations, the two 100 MHz channels were shared between the journalists and the TV cameras. To guarantee that the journalists’ still photo uploads did not impact the video feed capture, QoS was configured throughout the contribution access network. Specifically, the TV cameras were associated with a higher-priority 5QI value providing Guaranteed Bit Rate (GBR) services. This 5G service allowed the operators to reserve a minimum bit rate (GBR) for each TV camera, and also to limit the Maximum Bit Rate (MBR). This configuration gave the cameras a higher priority on the air interface. The spectrum band used in these locations accommodated a 100 MHz guard band on the upper end of the spectrum to ensure no interference from other wireless sources.

The two radios in each stadium location were allocated different frequency channels, each serving a dedicated TV camera. Each TV camera was thus locked to a particular radio, assigning each to half of the event location. This design was chosen to avoid unintended handover of a camera to the ‘remote’ radio, which would have resulted in unacceptable Xn handover delays and cell or frequency reselection.

Ten journalists from different press agencies were enabled for premium photo upload services. The journalists’ traffic used the same bands on the air interface, albeit with a lower priority than the GBR traffic from the TV cameras. Traffic from these premium journalists was associated with AMBR values for uplink and downlink to ensure that no single camera could starve out the others. For these press agency uploads, a separate DNN was configured per agency, which in turn allowed uploads to agency-specific FTP servers in the production suite. Additional SIM cards were issued to the remaining journalists for upload via the Internet. This group received the lowest-priority uplink service, which allowed it to use any unused bandwidth capacity in a best-effort manner.
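
The three service tiers described for the stadiums (GBR for TV cameras, AMBR-capped premium uploads, and best-effort uploads) can be sketched as a simple capacity split. This is a simplified model under stated assumptions, not the behaviour of the actual radio scheduler: the function name, the cell capacity, and all rates in the example are hypothetical, and cameras are assumed to receive exactly their guaranteed rate.

```python
from typing import Dict

def allocate_uplink(capacity_mbps: float,
                    camera_gbr_mbps: Dict[str, float],
                    premium_ambr_mbps: Dict[str, float],
                    best_effort_users: int) -> Dict[str, float]:
    """Split uplink capacity across the three tiers in strict priority order."""
    # Tier 1: TV cameras receive their guaranteed bit rate first.
    reserved = sum(camera_gbr_mbps.values())
    if reserved > capacity_mbps:
        raise ValueError("GBR reservations exceed the cell capacity")
    allocation = dict(camera_gbr_mbps)
    remaining = capacity_mbps - reserved

    # Tier 2: premium journalists get an equal share of what is left,
    # each capped at the configured AMBR.
    if premium_ambr_mbps:
        fair_share = remaining / len(premium_ambr_mbps)
        for user, ambr in premium_ambr_mbps.items():
            allocation[user] = min(ambr, fair_share)
        remaining -= sum(allocation[user] for user in premium_ambr_mbps)

    # Tier 3: best-effort users divide whatever capacity is still unused.
    allocation["best_effort_each"] = (
        remaining / best_effort_users if best_effort_users else 0.0
    )
    return allocation

# Hypothetical figures: 300 Mbps cell, two cameras, two agencies, four other users.
print(allocate_uplink(300, {"cam_a": 80, "cam_b": 80},
                      {"agency_1": 50, "agency_2": 100}, 4))
```

In this model a burst of photo uploads can only consume capacity that the cameras have not reserved, which mirrors the intent of the QoS design.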

All stadium events were configured for the same PLMN. This allowed journalists, for example, to move easily between event locations.

Figure 6 – High-level Architecture for Stadiums

In these events, the 4K/HDTV TV cameras generated around 1500 GB of live streaming traffic with GBR quality. The journalists uploaded up to 250 GB of still photographs.
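
As a rough plausibility check, the reported traffic volume can be converted into streaming time. The sketch below assumes decimal gigabytes and a constant bit rate, neither of which is stated in the text, so the result is an order-of-magnitude estimate only.

```python
def streaming_hours(volume_gb: float, bitrate_mbps: float) -> float:
    """Hours of streaming needed to produce volume_gb at a constant bitrate_mbps."""
    bits = volume_gb * 1e9 * 8               # decimal gigabytes to bits
    seconds = bits / (bitrate_mbps * 1e6)    # megabits per second to bits per second
    return seconds / 3600

# 1500 GB at the upper and lower end of the quoted 60-80 Mbps range.
print(round(streaming_hours(1500, 80), 1))
print(round(streaming_hours(1500, 60), 1))
```

Under these assumptions, 1500 GB corresponds to roughly 42 to 56 camera-hours of live video across all stadium cameras.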

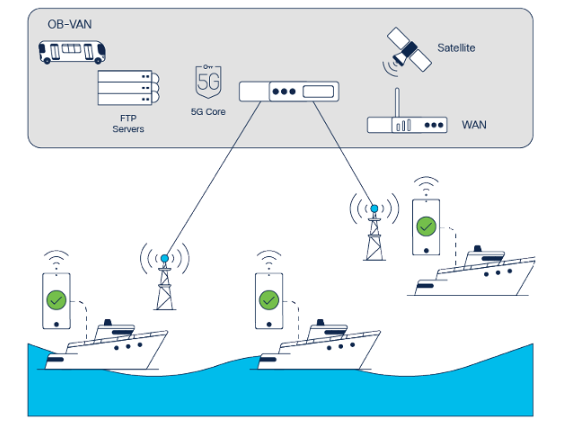

Sailing competitions

The use-case for immersive video in the sailing competitions enabled capturing live video and audio action with pen cameras mounted on helmets, or Samsung S24 cameras fixed on booms. Spectators consuming the live streams thus got to re-live the experience of the athletes on the competitive sailboats or surfboards, rather than watching only from afar through a land-based or helicopter-based camera.

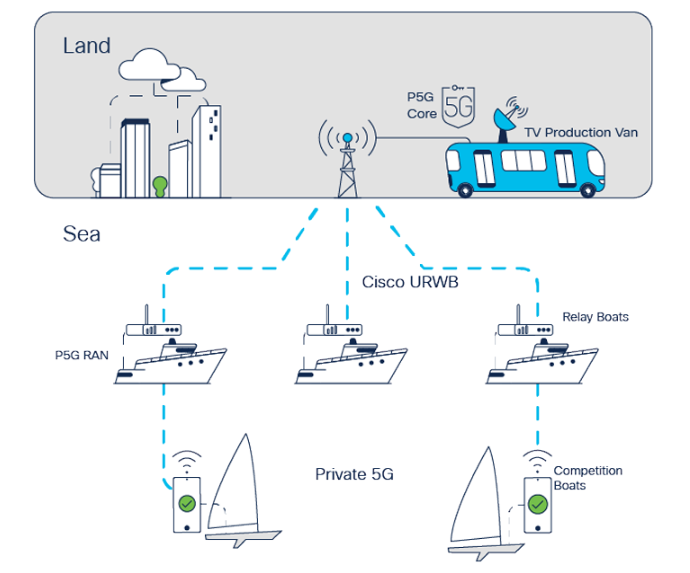

The requirements for capturing immersive live video from athletes in the sailing competitions differed significantly from those for stadiums or public locations. The field of play was several miles / kilometers out at sea, and itself had a diameter of several miles / kilometers. Providing coverage for such a large area from antennas on land was physically not possible in the allocated 5G midband spectrum.

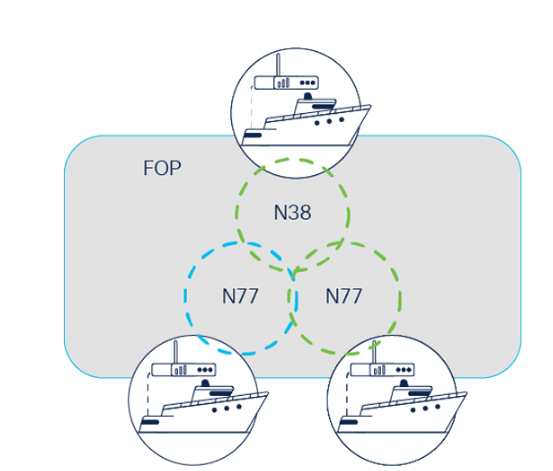

The live contribution network architecture in this case consisted of a private 5G RAN hosted on relay boats near the field of play. Video feeds captured by the Haivision MoJoPro application running on up to 12 Samsung S24 smartphones were transmitted over two 100 MHz N77 channels or one 40 MHz N38 channel to one of three relay boats hosting the 5G RAN antennas, RRHs and BBUs. The private 5G data plane sessions were then backhauled over a Cisco Ultra-Reliable Wireless Backhaul (Cisco URWB) network to the shore. This solution was based on 40 MHz channels in unlicensed 5 GHz spectrum, and consisted of point-to-point antenna pairs linking each of the three boats to Cisco URWB antennas on shore. BATS antennas were mounted on special racks on the relay boats to mitigate the movement caused by sea currents, swells, or the wind. Cisco URWB radios are able to transmit at higher power levels outdoors, and could thus bridge the distance between the relay boats and the shore. Furthermore, the high-availability mechanisms offered by Cisco URWB ensured that a reliable point-to-point link was established between each boat and the antennas on land. Additional resilience was provided by deploying redundant pairs of such backhaul links from each of the relay boats.
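
Why distance and transmit power matter for the boat-to-shore backhaul can be illustrated with the standard free-space path loss formula. This is a simplified sketch: real maritime links are also affected by sea-surface reflections, antenna gain, and boat movement, and the distances and carrier frequency used below are assumptions rather than figures from the deployment.

```python
import math

def fspl_db(distance_km: float, frequency_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(frequency_mhz) + 32.44

# Hypothetical link lengths at an assumed 5500 MHz carrier in the 5 GHz band.
for distance_km in (2, 5, 10):
    print(distance_km, "km:", round(fspl_db(distance_km, 5500), 1), "dB")
```

Every doubling of the distance adds about 6 dB of loss, which has to be recovered through transmit power or directional antenna gain.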

Traffic from the land-based Cisco URWB antennas was then transported via fibre links to the production suite hosted in the OB VAN, for post-processing as in the other events.

Figure 7 provides a high-level illustration of the maritime immersive broadcast architecture.

Figure 7 – High-level Architecture for maritime Events

The P5G RAN design had to accommodate handover of the smartphones from one relay boat to another. On each relay-boat two Radios were transmitting on different cells in different directions to achieve a better coverage of the field of play. Handover from one cell to another anchored on the same relay boat was transparent to the Private 5G core, offering optimal handover experience. Handover of a smartphone between two cells of different relay-boats had to be supported by the Private 5G core. A minimal additional latency of 10ms was incurred in this case. This latency was acceptable to the producers, since they had 12 video feeds at their disposal to select for broadcasting.
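
The two handover cases can be expressed as a small classification sketch. The `Cell` type, its field names, and the boat and cell identifiers are hypothetical and serve only to illustrate the distinction made above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cell:
    cell_id: str      # hypothetical cell identifier
    relay_boat: str   # relay boat that hosts the radio for this cell

def classify_handover(source: Cell, target: Cell) -> str:
    """Classify a cell change as 'none', 'intra-boat', or 'inter-boat'."""
    if source == target:
        return "none"          # device stays on the same cell
    if source.relay_boat == target.relay_boat:
        return "intra-boat"    # transparent to the Private 5G core
    return "inter-boat"        # has to be supported by the Private 5G core

def core_involved(source: Cell, target: Cell) -> bool:
    """Only handovers between cells on different relay boats involve the core."""
    return classify_handover(source, target) == "inter-boat"

a1, a2, b1 = Cell("A-1", "boat_a"), Cell("A-2", "boat_a"), Cell("B-1", "boat_b")
print(classify_handover(a1, a2), classify_handover(a1, b1))
```

Only the inter-boat case incurs the additional latency of about 10 ms quoted above.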

Figure 8 – Spectrum Architecture for maritime Events

A total of over 430 GB of live immersive video traffic was generated by the 12 cameras in this competition.

Challenges

To realize a project of this magnitude numerous challenges had to be overcome. The following challenges were particularly noteworthy from a technical perspective.

- Systems integration: integrating the various solution components – endpoint hardware, video capture and encoding, the private 5G Radio access network, the private 5G core, transport and backhaul networks, as well as production suite functions – required intricate integration efforts. Multiple configuration parameters and software versions had to be aligned to ensure that the overall architecture offered a single solution. The resulting architecture presented in this paper thus offers a true end-to-end functioning architecture.

- Spectrum acquisition: the realization and success of the live broadcast use-case relied on two 100MHz spectrum bands in all event locations for the duration of the events. Channels of this width are currently considered particularly valuable and thus required the corresponding negotiations with the national regulator.

- Spectrum management: despite the dedicated allocation of spectrum bands, some locations experienced significant interference from other sources. For example, unplanned ad-hoc radios by 3rd party operators were installed that caused such interference. In those cases, the spectrum was protected by additional filters to ensure that only the desired 5G frequencies were received by the radios. Also, additional guard bands were reserved to protect the allocated 100MHz channels from adjacent channels in higher and lower frequency bands.

- End-to-end latency: in the stadium locations achieving the required end-to-end latency of less than 90ms required careful tuning of the various parameters. A particular challenge was to maintain this latency during an Xn handoff for the TV cameras in the stadiums. Such an event would occur if a camera operator freely moved throughout the stadium and entered the range of the opposing radio (with a better signal strength). To prevent such undesirable latencies the TV cameras with the Haivision Pro460 encoders were ‘locked’ into the desired radio antenna by configuration. The camera operators thus concentrated on the events in their respective half of the stadiums.
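
Tuning towards the 90 ms target can be thought of as managing a latency budget across the stages of the chain. The sketch below simply sums per-stage contributions and reports the remaining headroom; the stage names and values in the example are hypothetical placeholders, not measurements from the project.

```python
from typing import Dict

GLASS_TO_GLASS_BUDGET_MS = 90  # requirement quoted in the text

def latency_headroom_ms(stage_latencies_ms: Dict[str, float],
                        budget_ms: float = GLASS_TO_GLASS_BUDGET_MS) -> float:
    """Return the remaining headroom; a negative value means the budget is exceeded."""
    return budget_ms - sum(stage_latencies_ms.values())

# Hypothetical per-stage values, for illustration only.
stages = {
    "capture_and_encode": 30,
    "air_interface": 15,
    "core_and_transport": 10,
    "decode_and_display": 25,
}
print(latency_headroom_ms(stages))
```

In such a budget, any additional handover delay is subtracted directly from the headroom, which is why avoiding handovers for the TV cameras mattered.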

A project of this magnitude also faced multiple project management challenges:

- Test access: to test the fully integrated end-to-end solution from the different supplying vendors (Haivision, Neutral Wireless, Intel-based servers, Cisco), multiple live integration tests had to be performed. Live testing was particularly critical to ensure that the 5G end devices worked as desired with the private 5G access network. Access to the actual sites was also mandatory to perform the proper radio planning (where to install the radios, understanding the signal propagation behavior and sources of interference). Coordination of the necessary test slots proved challenging, since the event locations imposed strict security controls. Also, the sites were at times reserved for other activities, which prevented ready access to sufficient test slots.

- Cost Control: as is typical for any solution, the overall solution had to meet the allocated budgets for both capital and operational expenditures. For an optimal Private 5G-based network architecture providing full coverage for the handheld video phones (especially in public sites!), the number of radios would have had to increase significantly, which would have exceeded the allocated budget. The cost envelope was maintained by accepting gaps in the coverage areas for public events, and by leveraging the nomadicity of the solution. Some of the events did not run in parallel, so the packet core of one of the stadium locations could be re-used for the public events. This reduced the overall costs for the packet core.
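
The packet core re-use described under Cost Control amounts to counting how many events overlap in time. A minimal sketch follows, assuming each core serves one event at a time and ignoring relocation and setup time; the function name and the event windows in the example are hypothetical.

```python
from typing import List, Tuple

def cores_needed(event_windows: List[Tuple[int, int]]) -> int:
    """Minimum number of packet cores if each core serves one event at a time."""
    # Build a list of (time, +1) for starts and (time, -1) for ends.
    points = []
    for start, end in event_windows:
        points.append((start, 1))
        points.append((end, -1))
    # At equal times, ends sort before starts so back-to-back events share a core.
    points.sort(key=lambda p: (p[0], p[1]))
    in_use = peak = 0
    for _, delta in points:
        in_use += delta
        peak = max(peak, in_use)
    return peak

# Hypothetical schedule in days: two overlapping events, then one that follows.
print(cores_needed([(1, 3), (2, 5), (5, 7)]))
```

In this example three events require only two cores, because the third event starts when the second one ends.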

Summary

Immersive broadcast content acquisition networks for sports, media, and entertainment offer remote spectators worldwide a completely new way to consume live content. Viewers can experience the action from the perspective of the athlete on boats, bikes, or the competition floor, and thus be closely engaged with live events. Such networks narrow the gap between watching a competition live or remote, and thus open new revenue streams for content acquisition organizations.

The immersive broadcast architecture presented herein consists of a complete ecosystem of partners delivering an end-to-end solution. The capture of live video streams and the associated encoding and control functions were delivered by Haivision. Both hardware-based encoders and software-based solutions running on smartphones encode the live video streams with high quality and reliability and transmit them over a private 5G network to the production studio. The architecture relies fully on an innovative private 5G transport infrastructure delivered by Cisco (Private 5G Core) and Neutral Wireless (RAN) on standard Intel-based servers. Such networks offer dedicated spectrum that can be optimized for upload-centric traffic flows and that almost entirely mitigates interference from foreign sources. Traditional cellular macro networks or private Wi-Fi based solutions, which are used by thousands of consumers in live broadcast environments, are not suitable in such environments, since they cannot guarantee the required bandwidth for TV cameras or journalists’ still cameras. The private 5G network presented herein also fully supports the quality of service, reliability, nomadicity and local management capabilities required for such high-profile events.

The architecture was implemented at a major sports event, supporting a multitude of competitions in stadiums, pools, and even at sea. The live video feeds captured during these events contributed to a heightened experience for remote viewers. The overall success of the event provided the proof-point to make immersive broadcast content acquisition networks the de-facto standard for future live sports, media, and entertainment events (see [8] on the different philosophy). Additional services for event locations such as distributed ticketing with handheld devices in the parking lots, access control, or safety communication for event staff can additionally be considered for this architecture to solidify the business cases.

References

[1] Statista, https://www.statista.com/topics/12442/paris-2024-olympics/#topicOverview, last referenced on 7. October 2024.

[2] International Olympic Committee (IOC), https://olympics.com/ioc/news/paris-2024-record-breaking olympic-games-on-and-off-the-field, last referenced on 7. October 2024.

[3] M. B. Waddell, S. R. Yoffe, K. W. Barlee, D. G. Allan, I. Wagdin, M. R. Brew, D. Butler, R. W. Stewart, “5G Standalone Non-Public Networks: Modernising Wireless Production”, 17. September 2023, Available: https://www.ibc.org/technical-papers/ibc2023-tech-papers-5g-standalone-non-public-networks modernising-wireless-production/10246.article, last referenced on 7. October 2024.

[4] J. T. Zubrzycki and P. N. Moss, BBC R&D White Paper WHP 052, “Spectrum for digital radio cameras”. https://www.bbc.co.uk/rd/publications/whitepaper052, last referenced on 7. October 2024.

[5] Cisco, “Cisco IP Fabric for Media Design Guide”, https://www.cisco.com/c/en/us/td/docs/dcn/whitepapers/cisco-ipfm-design-guide.html, last referenced on 7. October 2024.

[6] Haivision, “Broadcast transformation Report”, 2024, https://www3.haivision.com/broadcast-report 2024, last referenced on 9. October 2024.

[7] Live Production, “HD Production: FIFA World Cup 2010”, https://www.live-production.tv/case studies/production-facilities/hd-production-fifa-world-cup%E2%84%A2-2010.html, last referenced on 9. October 2024.

[8] World Finance, “Paris set for Olympics hosting gold” https://www.worldfinance.com/special reports/paris-set-for-olympics-hosting-gold, last referenced on 9. October 2024.